The Great AI Infrastructure Capital Misallocation

A Personal Meditation and Long Story of Concentrations, Limitations, and Corrections in the AI Industry

When Cisco lost 89% of its value after its March 2000 peak, we learned that infrastructure build-outs follow S-curves, not exponential ones. Today’s AI infrastructure leaders echo this pattern with uncomfortable precision.

Before examining these risks in detail, let me be clear: I remain deeply optimistic about artificial intelligence as a transformative technology over the coming decades. Large Language Models have proven their utility and will persist as valuable tools. The question isn’t whether AI will transform the economy. It will, just as the Internet did. The question is whether current valuations have already priced in outcomes that physics, economics, and human psychology make impossible to achieve.

Multiple converging storm clouds paint a picture of a market approaching a hefty repricing: extreme concentration risks in AI supply chains, the hard limits of Moore’s Law, diverging unit economics of AI infrastructure, unprecedented resource constraints, and shifting sentiment.

Part 1: Concentration Risks in the AI Infrastructure Supply Chain

The AI infrastructure revolution contains profound geopolitical undercurrents absent during the Internet buildout. While both required substantial energy and manufacturing capacity, AI’s energy demands run 10-100x higher per computation. A single GPT-4 training run consumed electricity equivalent to powering thousands of homes annually. More critically, where Internet infrastructure could be manufactured across dozens of countries, cutting-edge AI chips emerge from vanishingly few facilities.

Manufacturing Chokepoints

TSMC produces approximately 90% of the world’s most advanced processors, controlling 67% of the global foundry market. This concentration creates a physical bottleneck where a single earthquake, blockade, or crisis could halt global AI development overnight. ASML’s absolute monopoly on extreme ultraviolet (EUV) lithography machines (€200 million devices essential for producing cutting-edge chips) compounds this vulnerability. The entire AI ecosystem depends on two companies in two small geographic areas, Taiwan and The Netherlands.

Beyond these headline dependencies, the supply chain features multiple hidden vulnerabilities. Japan controls 90% of photoresist chemicals essential for chip production. South Korea dominates memory chips through Samsung and SK Hynix. The U.S. controls critical design software through Cadence, Synopsys, and Mentor Graphics, without which even Chinese firms cannot design competitive chips.

Nations scramble to reduce dependencies, but barriers prove formidable. The U.S. CHIPS Act’s $52 billion and Europe’s €43 billion Chips Act pale against the estimated cost of genuine semiconductor autonomy (sidenote: AI sovereignty might equally be a future driver of chipset-manufacturer revenues). Building a single cutting-edge fab costs $20-30 billion and takes 3-5 years, assuming access to ASML’s machines, which have multi-year waiting lists. China’s domestic lithography equipment, currently in development, targets 28nm production, while SMIC has demonstrated 7nm chip production (and is working on 5nm) using imported DUV technology with multi-patterning techniques, albeit at significantly lower yields and higher costs than TSMC’s EUV-based 3nm production.

Complete semiconductor autonomy appears practically unachievable for any single nation, given the technological complexity and globally distributed expertise required. This creates a paradox: nations may want to hinder each other from developing true autonomy, yet complete decoupling is not feasible. The result is a Mutually Assured Destruction (MAD) dynamic (indeed, like nuclear weapons, AI is a matter of national security), in which China controls 80% of rare earth processing while depending on Western technology, and the West needs Chinese materials while restricting technology exports. Each side’s leverage risks triggering cascading retaliation, yet neither can afford to walk away.

This concentration transforms semiconductor access into geopolitical currency. Taiwan’s “silicon shield” strategy bets neither China nor the U.S. can afford disrupting chip production. The Netherlands faces pressure from Washington and Beijing over ASML export licenses, each shipment carrying diplomatic weight once reserved for arms deals. U.S. export controls demonstrated that cutting chip access can cripple technological development, with China’s AI industry losing an estimated 1-2 years of progress from October 2022 controls alone.

Beyond Taiwan’s chip ecosystem, the Netherlands’ alignment with European sovereignty is equally apparent: ASML has invested in Europe’s Mistral AI, and Chinese management has been ousted from strategic Dutch companies such as Nexperia to protect the European supply of semiconductors for cars and other electronic goods.

Critical Materials Monopolies

The AI infrastructure boom creates unprecedented demand for specific materials with severely limited sources. Unlike the dotcom era’s reliance on common materials, modern AI chips require exotic elements with extreme geographic concentration.

China controls 85-90% of global rare earth processing capacity despite holding only 37% of reserves. As Philip Andrews-Speed of the Oxford Institute notes, “China has been working on improving its ability to do this for two decades,” producing 70 kilotons of refined rare earths (REEs) annually whilst controlling nearly the entire value chain. The 2010 rare earth crisis, when prices spiked 600% following export restrictions, demonstrated this leverage, though evidence suggests broader quota reductions rather than targeted sanctions.

More acutely, Ukrainian companies Ingas and Cryoin supplied 45-54% of global semiconductor-grade neon before Russia’s 2022 invasion. Neon is important as it’s the primary buffer gas in excimer lasers used for semiconductor photolithography. Both companies ceased operations, with Ingas’s Mariupol facility producing 15,000-20,000 cubic meters monthly now shuttered. The disruption drove neon prices up 600% in spot markets, echoing the 2014 Crimean crisis spike.

China produces 94% of global gallium and 83% of germanium, both critical for high-performance chips. Beijing’s July 2023 export restrictions, escalating to a December 2024 ban on exports to the U.S., weaponised what Deng Xiaoping once called China’s “rare earths advantage.” Unlike oil, which has multiple suppliers, these materials face 5-10 year development timelines for new sources.

But perhaps most overlooked is helium. The U.S. Federal Helium Reserve’s 2024 privatisation ended its role supplying 30% of domestic needs. Qatar’s 30% global market share creates new dependencies for cooling quantum computers and next-generation AI chips. Unlike other elements, helium literally escapes Earth’s atmosphere when released, making it truly non-renewable.

Part 2: The Economics Don’t Add Up

Markets themselves exhibit erratic behaviour before corrections, where increased volatility, correlation breakdowns, and narrative confusion often precede crashes.

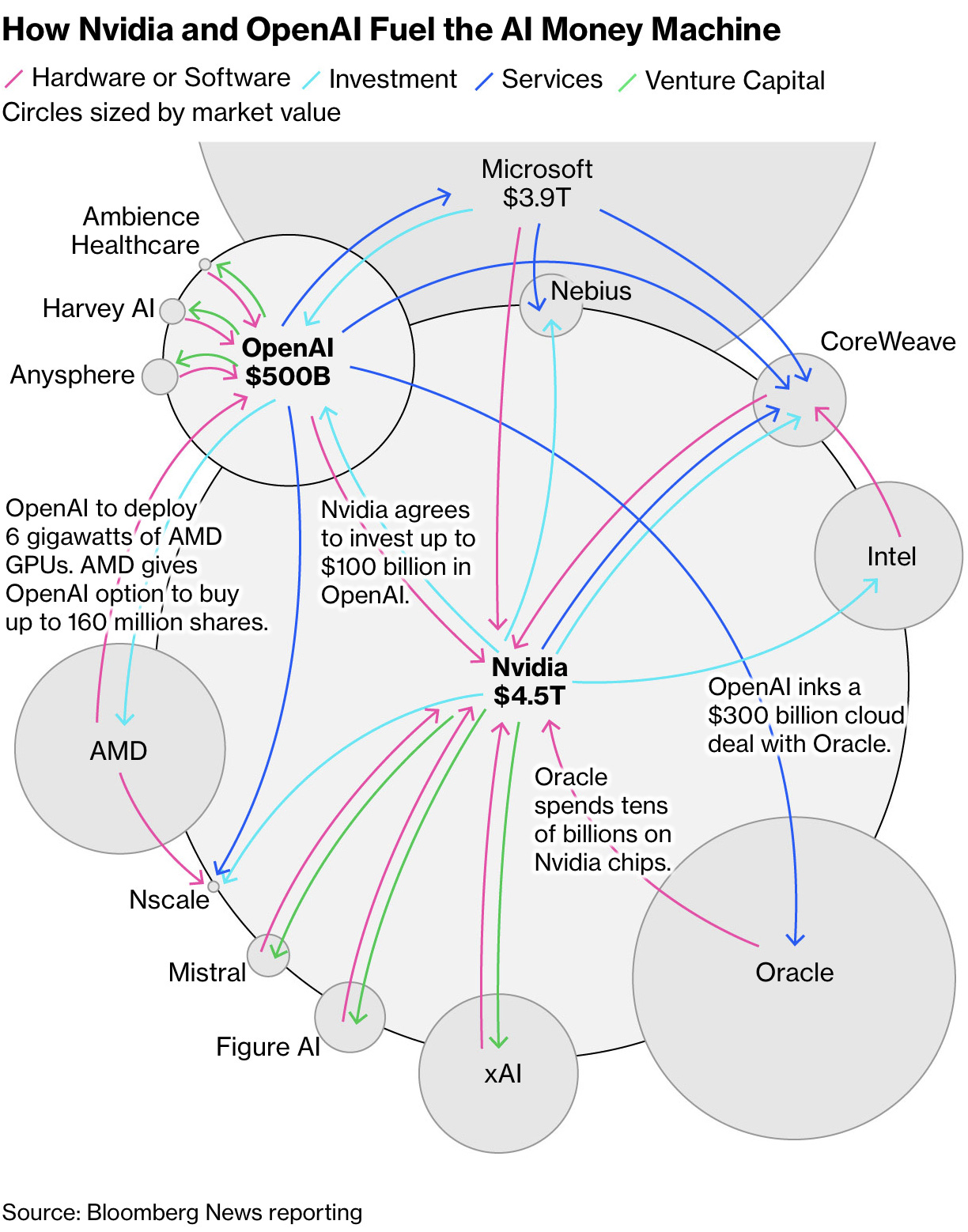

The AI sector now displays these warning signs through what Bloomberg’s reporting reveals as an unprecedented web of circular financing arrangements. Nvidia plans to invest up to $100 billion in OpenAI to fund data centre construction, while OpenAI commits to filling those facilities with Nvidia chips. OpenAI then struck a $300 billion deal with Oracle for data centres, with Oracle spending billions on Nvidia chips for those same facilities, creating a closed loop where money flows from Nvidia to OpenAI to Oracle and back to Nvidia. Further, Nvidia seems to be buying its way into the supply chain by investing $5 billion in Intel (after all, Intel bought out ASML’s stock of EUV machines in 2024). This reminds me of the 1990s circular deals that centred on advertising and cross-selling between startups to inflate perceived growth.

The “Magnificent Seven” (Apple, Microsoft, Google, Amazon, Nvidia, Meta, Tesla) now comprise over 30% of the S&P 500’s total market capitalisation, exceeding the concentration seen at the peak of the dotcom bubble. But the scale of these arrangements dwarfs previous technology bubbles.

US tech companies have raised approximately $157 billion in bond markets alone this year (a 70% increase from last year) with Oracle’s $18 billion jumbo bond sale drawing nearly $88 billion in demand. Meta Platforms has lined up $29 billion of private credit for a Louisiana data centre, whilst banks prepare a $38 billion package for Oracle-linked campuses in Texas and Wisconsin. This debt-fuelled expansion occurs despite Oracle’s free cash flow flipping negative for the first time since 1992, even as its total debt pile surpasses $100 billion, as Bloomberg’s Ed Ludlow reports (a further, personal, concern is the amount of CLO-exposure in debt financing).

The opacity of these financial arrangements masks systemic vulnerabilities. Elon Musk’s xAI exemplifies this creative engineering, structuring a $20 billion funding round split between $7.5 billion equity and $12.5 billion debt via a special purpose vehicle set up specifically to purchase Nvidia processors, which xAI would then rent for five years. CoreWeave, in which Nvidia holds a 7% stake and which has received $350 million from OpenAI, has expanded its cloud deals with OpenAI to $22.4 billion, further entangling the sector’s key players.

Kindleberger’s “Manias, Panics, and Crashes” documents late-stage bubble psychology with similar patterns, where companies desperately tried to justify valuations that assumed impossible growth rates. Today’s AI sector displays these characteristics magnified, with hyperscaler CapEx commitments at unsustainable levels. OpenAI’s Sam Altman claims he wants to spend “trillions” on infrastructure whilst the company burns through cash and doesn’t expect to be cash-flow positive until near decade’s end, according to Bloomberg’s analysis. Sequoia Capital’s analysis frames this as “AI’s $600 billion question”: where will the revenue come from to justify this spending?

A Harvard Kennedy School researcher who worked through the dot-com era observes that whilst “1990s circular deals centred on advertising and cross-selling between startups to inflate perceived growth, today’s AI firms have tangible products but their spending still outpaces monetisation.”

The concentration of capital in AI infrastructure mirrors historical patterns where impossible growth assumptions preceded crashes, but with novel systemic risks. A Bain & Co. report predicts AI firms’ revenue could fall $800 billion short of what’s needed to fund computing power by 2030, whilst MIT researchers find that 95% of corporate AI pilots don’t deliver returns. Morningstar analyst Brian Colello warns that “if we get to a point a year from now where we had an AI bubble and it popped, this deal might be one of the early breadcrumbs [...] If things go bad, circular relationships might be at play.”

This isn’t merely Internet infrastructure redux. It’s a new form of technological sovereignty where control over dozens of facilities determines national AI capabilities. Unlike the distributed Internet where packet routing bypassed damaged nodes, AI’s hardware dependencies create catastrophic single points of failure. Oracle’s cloud business generated just 14 cents gross profit for every dollar in sales from renting Nvidia-powered servers, according to internal documents reported by The Information (a margin so thin that any disruption could cascade through the interconnected web of financial and legal obligations).

As Bernstein Research analyst Stacy Rasgon observes, Altman “has the power to crash the global economy for a decade or take us all to the promised land. Right now we don’t know which is in the cards.” A further alarming signal comes from veteran short seller Jim Chanos, who famously predicted the Enron collapse and who highlighted a key contradiction: “Don’t you think it’s a bit odd that when the narrative is ‘demand for compute is infinite,’ the sellers keep subsidizing the buyers?” This echoes patterns from the dot-com era, when companies engaged in circular revenue deals to maintain growth appearances.

The opacity extends beyond individual transactions to the entire capital allocation mechanism. Altman himself admits “I don’t think we’ve figured out yet the final form of what financing for compute looks like,” even as OpenAI pursues a model where it will lease rather than buy AI processors to make debt financing more feasible. These creative financial instruments, from special purpose vehicles (SPVs) to circular equity investments to massive debt packages, create dependencies where one failure to execute may trigger an avalanche, as each participant’s ability to service obligations depends on continued exponential growth that history suggests is unsustainable. Even Jeff Bezos has warned that “investors have a hard time in the middle of this excitement distinguishing between the good ideas and the bad ideas,” noting the AI spending resembles an “industrial bubble” (sidenote: Many hyperscalers have been priced as high-growth tech companies, while in fact they are industrial players. It is just a matter of time before they will get repriced accordingly).

Finally, venture capital also exhibits late-cycle behaviour. Tiger Global, Coatue, and SoftBank have marked down AI portfolios 30-50%. New investment slowed dramatically: AI funding dropped from $97 billion in Q4 2023 to $41 billion in Q2 2024. The smart money is retreating while retail piles in through ETFs and index funds.

Part 3: The Physical Limits of Silicon

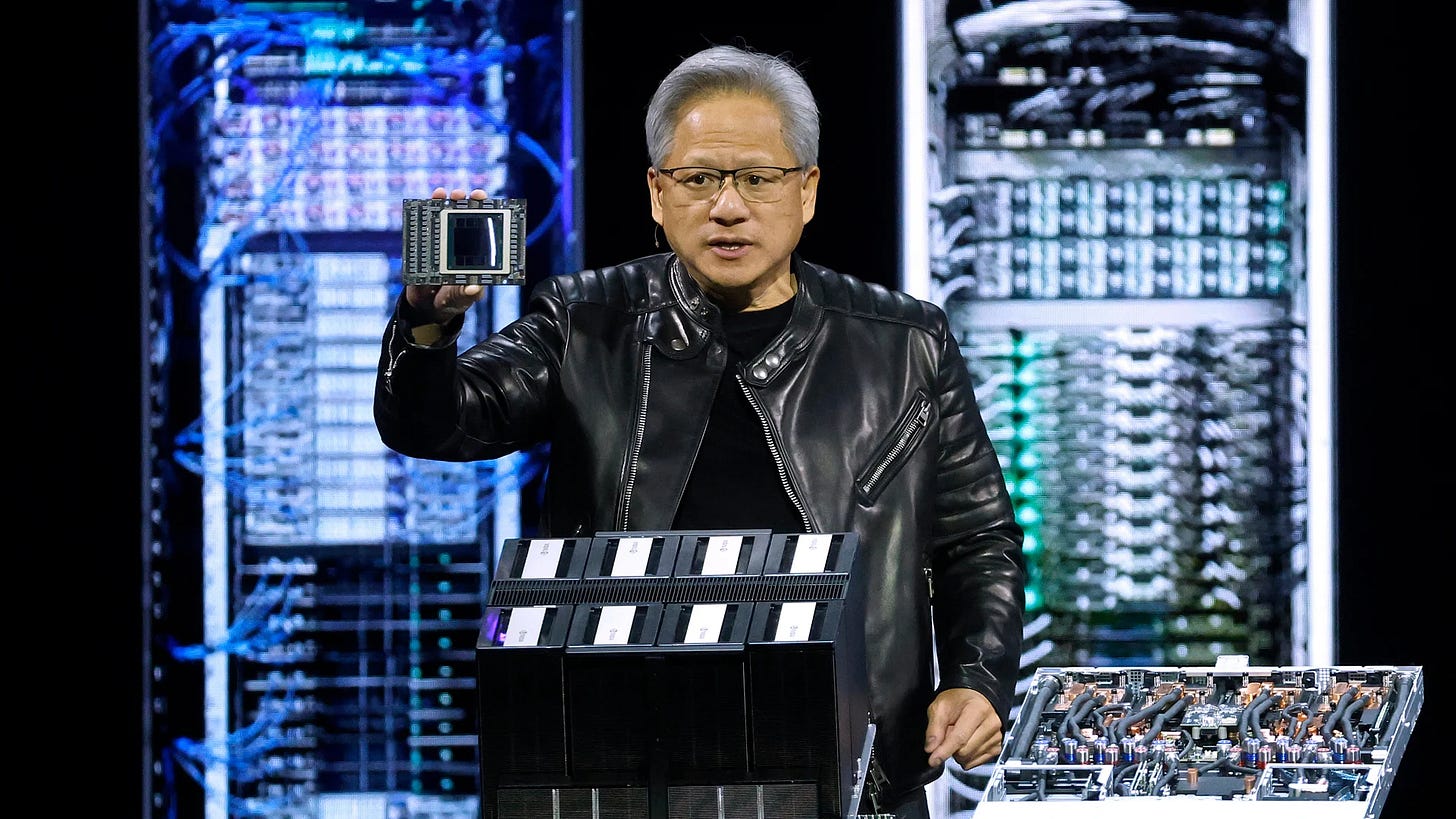

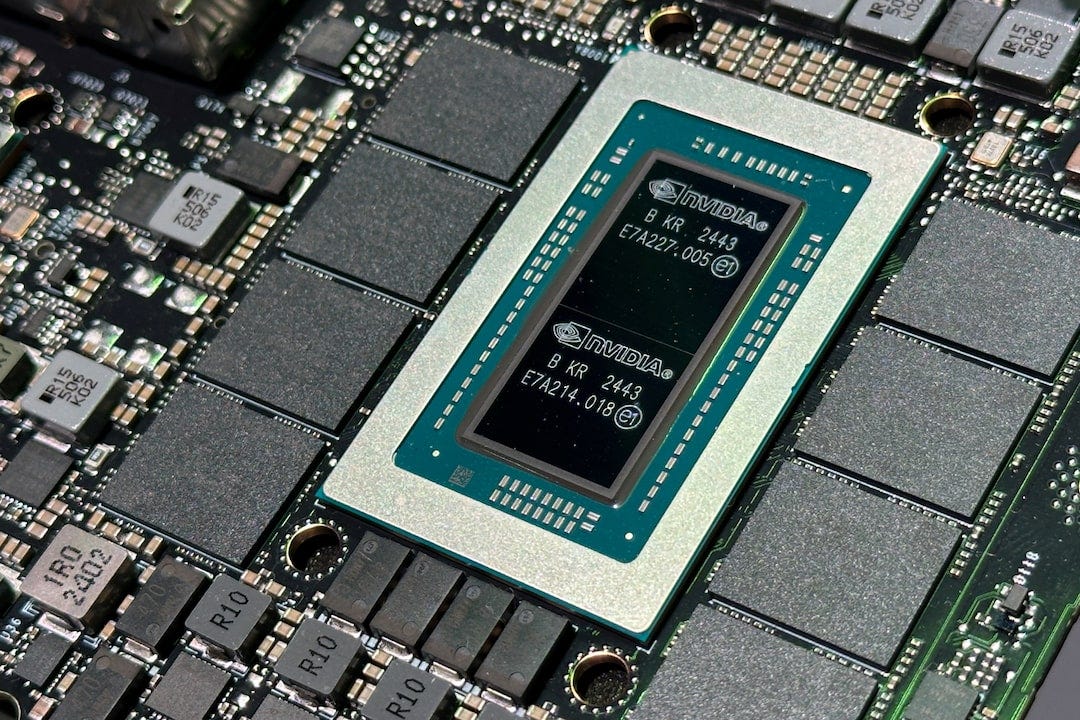

Computational speed has driven chipset innovation from early gaming applications (anyone who played Doom with a GPU card will know the difference) through Nvidia's 1993 founding and subsequent GPU dominance. This gaming-focused parallel processing expertise enabled cryptocurrency mining applications (2010-2018) before positioning Nvidia advantageously for the AI revolution around 2022-2023, driving substantial equity appreciation as GPU-accelerated computing proved commercially viable for AI training and inference. Indeed, Nvidia is a company that grew from pixels to prompts.

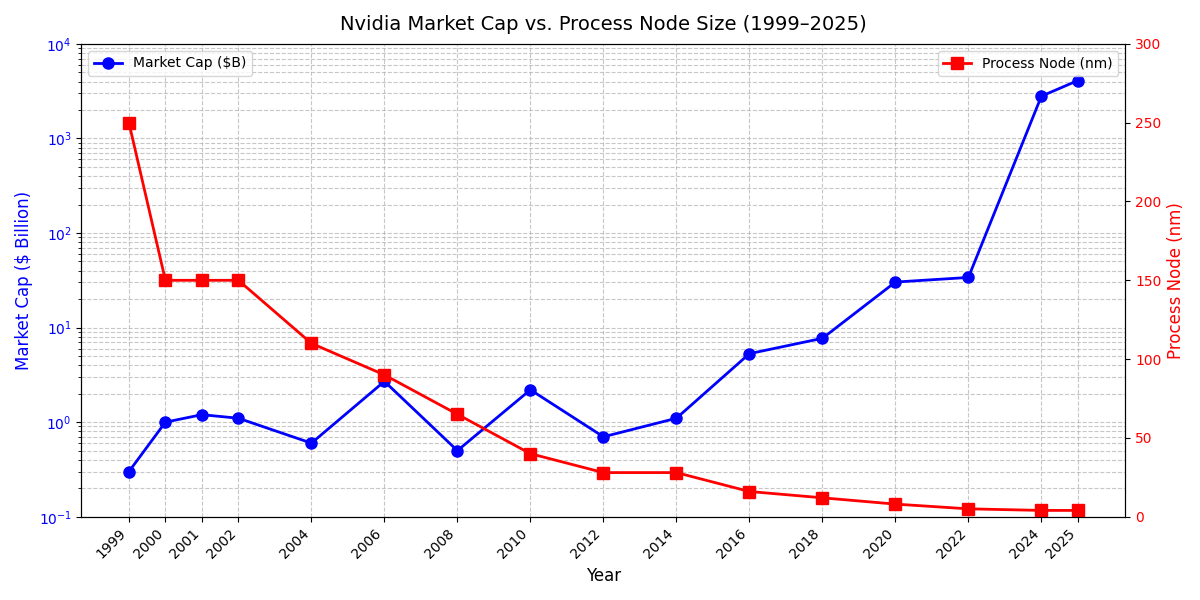

In general, chipset miniaturisation remains essential because smaller designs deliver higher transistor density, superior power efficiency, reduced signal latency, lower per-unit manufacturing costs, and improved thermal management. This aligns with Moore's Law principles, wherein continued exponential computational growth depends upon scaling down feature sizes to meet modern applications' demands.

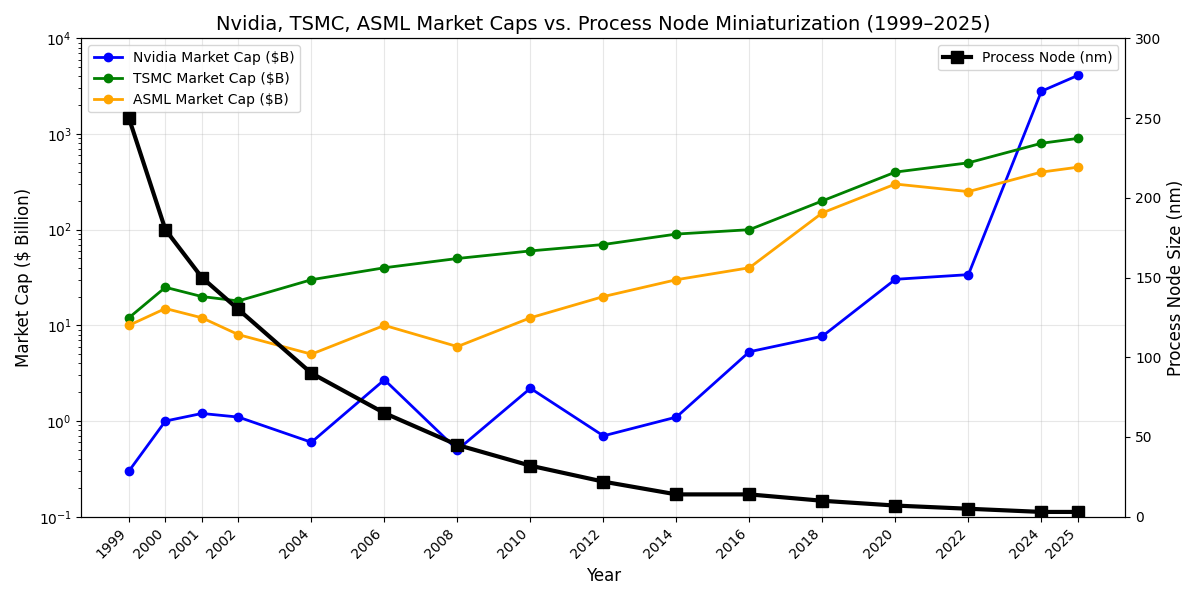

The techno-economic understanding is that the higher the transistor density, the more compute speed one can deliver. When applying this to Nvidia, we get the following graph:

While the meteoric rise of Nvidia’s share price between 2022 and 2023 was also driven by expectations around LLMs, and not only by miniaturisation, there is a strong correlation (~0.75) between transistor density and Nvidia’s market cap. For players more closely involved in the transistor density play, such as ASML and TSMC, the correlation is even stronger.

However, the AI boom's fundamental assumption, that chips will become exponentially more powerful whilst remaining economically viable, faces mounting physical constraints. I want you to consider Moore’s Law strictly as a techno-economic model, in which physical barriers, such as atomic-scale limits, may very well slow the growth of chipset manufacturers’ market caps.

The challenges are plentiful. Current flagship models already require enormous computational resources costing tens of millions to train, whilst next-generation chips like Nvidia's B200 and GB200 consume 1,200 watts, pushing practical cooling and power limits. Future generations may demand 2,000+ watts, creating thermal densities that challenge the economic and physical viability of continued AI scaling. For decades, performance improvements in general-purpose CPUs and GPUs averaged significant annual gains, driven by transistor scaling under Moore’s Law. Since around 2010, the rate of these gains has slowed substantially as the industry has confronted physical limits like heat dissipation and ballooning costs for new manufacturing nodes, shifting innovation toward architectural specialisation and other techniques.

In various discussions, 2nm is generally accepted as nearing the “endgame” for silicon (nodes below 1.5nm face severe quantum-tunnelling and cost walls), with 1nm being called “unrealistic” without new physics. What is clear is that the claim that “chips will become exponentially more powerful whilst remaining economically viable” is no longer realistic. 3nm is viable but costly; 2nm stretches the economics; 1nm is infeasible. We can reasonably forecast that by ~2030 density gains will stall unless new technologies or breakthroughs arrive (e.g., graphene transistors, glass, quantum computing, photonic computing).

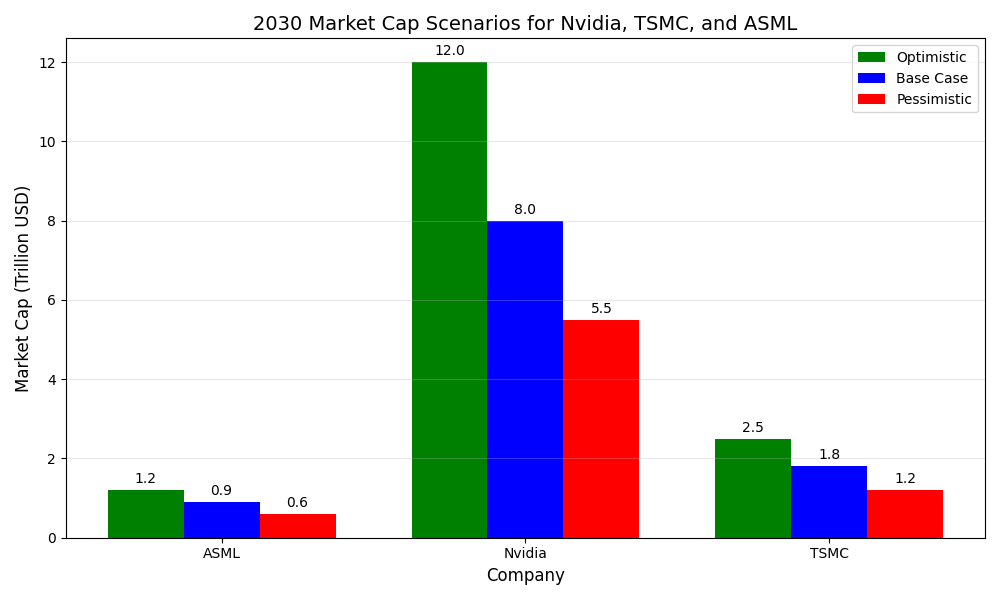

Nvidia’s valuation, $4.1T at a P/E of ~50x and reaching $5T in October 2025, assumes continued AI growth (e.g., $46.7B Q2 2025 revenue, up 56% YoY). A scaling wall, in the form of slowing density gains (2nm by 2026, 1nm speculative), could trigger corrections if costs continue to outpace performance. The following scenarios are plausible outcomes over the next five years:

Constraints on transistor density will impact ASML and TSMC more directly than Nvidia due to their roles in lithography and foundry production, respectively. ASML’s EUV tools and TSMC’s advanced nodes (e.g., 3nm, 2nm) face immediate challenges from quantum tunnelling, low yields, and escalating fab costs, directly affecting their margins and growth. Even if cosmetic fixes or last-mile solutions delay the moment when the limits of Moore’s Law catch up, at some point over the next few years physics will give the financial markets a run for their money.

Zooming in on Nvidia, its traditional moat (integrated CUDA software, AI-optimized GPUs, and ecosystem) mitigates reliance on raw density gains by enhancing performance through software and architectural innovations. However, Nvidia’s primary risk lies in a potential downturn in AI sentiment, such as reduced hyperscaler spending or disillusionment with generative AI’s ROI, which could trigger significant valuation corrections, compounded by secondary risks like competition and supply chain disruptions.

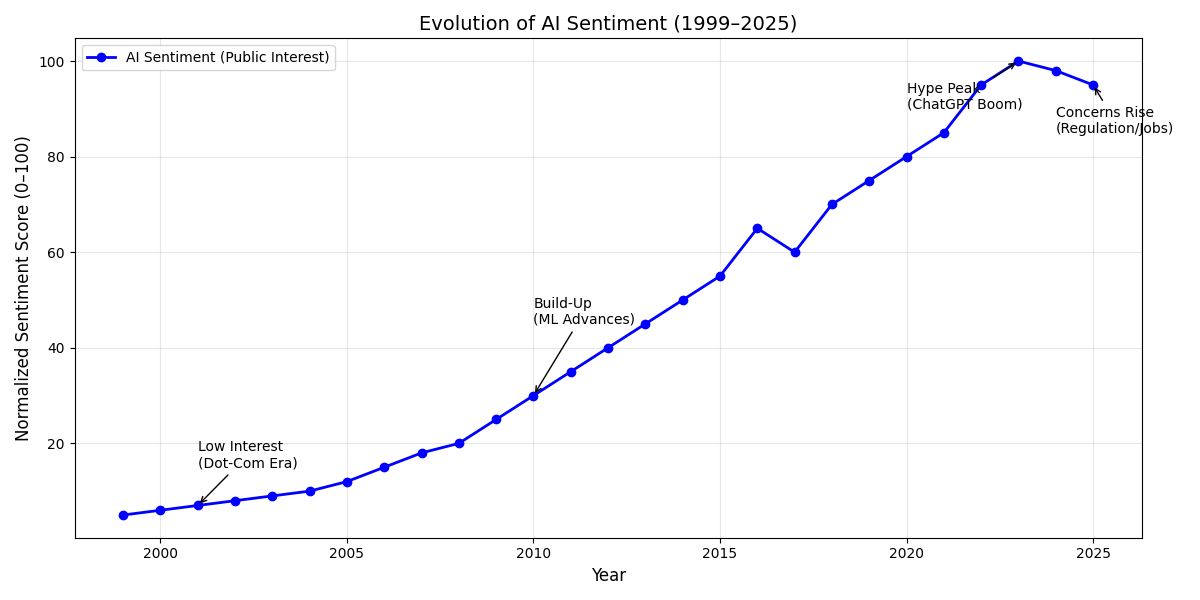

Part 4: From Obscurity to Ubiquity, AI’s Sentiment Evolution

AI sentiment is an important driver of the market caps of AI companies, in both private and public markets. Analysing Google Trends together with significant AI moments over the past decades indicates we are indeed at a historical moment: AI has never been more ubiquitous in our daily lives than it is today.

Nvidia, the Canary in the Coal Mine

Taking Nvidia’s stock price ($NVDA) as the proverbial canary in the coal mine for AI market capitalisations, its Q2/25 earnings call revealed a miss in datacenter revenues ($41.1b actual vs. $41.3b projected) as well as investors who had priced the stock “for perfection”, expecting another blowout to justify the $4T+ valuation. It seems “being merely on the mark, simply is not going to cut it”, which points to overinflated expectations. Nvidia’s Q3/25 earnings call was received positively by the markets, but taking a step back, I do have to make some critical notes:

Jensen casually claims to take “$35 billion out of $50 billion per gigawatt data center” (note: 1GW datacenter requires the equivalent of a nuclear reactor ➡️ the U.S. adds only ~30GW of total generation capacity annually ➡️ the assumption of “10 gigawatts” for OpenAI alone is fantasy without massive grid infrastructure changes).

Rubin “chips are in fab” but that’s 12+ months from revenue, assuming no hiccups (it is unclear how the SK Hynix inventory shortage might impact Nvidia). Also, the “annual rhythm” strategy could lead to an Osborne effect and inventory write-downs.

Questionable growth projections, with Nvidia projecting $3-4 trillion in AI infrastructure spend by 2030. With current hyperscaler CapEx at $600B/year, this implies a roughly 40% CAGR for five years. With claims of taking “$35 billion out of every $50 billion”, this would mean ~$2.5 trillion in cumulative revenue by 2030, larger than most countries’ GDPs.

The whole thesis hinges on “100x-1000x” compute requirements for reasoning AI, physical AI/robotics becoming mainstream, and enterprises actually deploying agentic-AI at scale. And none of these points have proven a solid ROI yet.
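The arithmetic behind the growth projection above can be checked with a short sketch. It uses only figures quoted in this section ($600B current annual CapEx, $3-4 trillion by 2030, five years, $35B out of every $50B). The function name `implied_cagr` is my own, and reading the $3-4 trillion as an annual run-rate reached in 2030 is an assumption, not something the earnings call spells out.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to grow from start to end."""
    return (end / start) ** (1 / years) - 1


CURRENT_CAPEX_BN = 600        # hyperscaler CapEx per year, as quoted above
TARGETS_BN = (3_000, 4_000)   # projected AI infrastructure spend by 2030
YEARS = 5
NVIDIA_SHARE = 35 / 50        # "$35 billion out of every $50 billion"

for target in TARGETS_BN:
    cagr = implied_cagr(CURRENT_CAPEX_BN, target, YEARS)
    nvidia_take = NVIDIA_SHARE * target  # assumption: share applied to the 2030 figure
    print(f"${target:,}B target: CAGR {cagr:.0%}, Nvidia share ${nvidia_take:,.0f}B")
```

Under these assumptions the required growth rate lands at roughly 38-46% per year, in line with the ~40% above, and a 70% share of $3-4 trillion brackets the ~$2.5 trillion figure.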

Indeed, the proliferation of LLMs since 2022 has been remarkable, but post-2021 newcomers to the field must realise AI is more than GPT. The danger is not that GenAI replaces data scientists, but that it convinces amateurs they are one. Lowered barriers to entry have reduced the perceived need for AI literacy. At the risk of sounding blunt: more naive people than ever before are using complex technology with insane expectations and fewer skills. This will create volatility at some point.

A Shift in Sentiment

Already, we are witnessing increasingly negative sentiment about AI slop, lawsuits in which customers claim chargebacks from consultants who used AI-generated text, and industry leaders who pick their words carefully when pressed on whether to anticipate bubble-formation risks.

This volatility in sentiment increases the risk that even the slightest hesitation, slowdown, or piece of bad news could trigger sell momentum. We already see an emerging trend of AI bubble concerns, with recent selloffs in AI stocks (e.g., post-August hype peak) and questions about ROI (e.g., 95% of firms experimenting with AI see no revenue yet) contributing to this change in sentiment. Consider Sam Altman’s (OpenAI) remarks after the recent GPT-5 release that he feels the AI market is overhyped; Eric Schmidt’s urging of Silicon Valley to “stop fixating on superhuman AI”, warning that this obsession hinders useful innovation; or long-term LLM critics Yann LeCun and Gary Marcus, who have been warning about LLMs’ limitations and predicting that the overpromises will fail. It feels as if the leaders of the LLM-dominated AI narrative are trying to gently lower expectations in order to avoid a runaway panic selloff, while insiders at Nvidia, CoreWeave, OpenAI, Eleven Labs, and others are taking some money off the table.

In the private markets, global VC funding hit $17B in August 2025, the lowest monthly total since 2017 (Crunchbase), with AI seed deals down 33% YoY. PwC's 2025 AI Predictions note a "sharper ROI emphasis," with 80% of projects failing (RAND), leading to 50–60% of early AI rounds being renegotiated or abandoned. In private investor chatrooms discussing allocations, sentiment towards AI startups is also returning to normality. Recently, an investment group I’m part of declined to join a small allocation in Perplexity’s ongoing round at a $20B valuation. With $148M in ARR as of June 2025, that implies a 135x revenue multiple, which has gotten a bit too crazy for me.
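The revenue multiple above follows directly from the two figures quoted in the text. A minimal sketch, where `revenue_multiple` is simply my own helper name:

```python
def revenue_multiple(valuation_usd: float, arr_usd: float) -> float:
    """Valuation expressed as a multiple of annual recurring revenue."""
    return valuation_usd / arr_usd


VALUATION_USD = 20e9   # round valuation quoted above
ARR_USD = 148e6        # ARR as of June 2025, quoted above

multiple = revenue_multiple(VALUATION_USD, ARR_USD)
print(f"{multiple:.0f}x ARR")
```

Put differently, at this price an investor is paying for roughly 135 years of current recurring revenue before any growth is assumed.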

Part 5: Nearing the Limits of LLMs

The grand narrative of generative AI, where large language models (LLMs) are viewed as precursors to artificial general intelligence (AGI), is fraying. Even the architects of the current AI boom now concede that the paradigm is hitting its natural boundaries. Accuracy, scaling, and output quality are all showing unmistakable signs of strain, as Silicon Valley is seeking to quietly back out of the AGI narrative.

LLMs remain systems of statistical pattern-matching rather than comprehension. Gary Marcus argued that hallucinations are not an occasional glitch but a structural property of models trained to predict the next token, not to verify truth.

Sam Altman, the CEO of OpenAI, has echoed similar caution. He publicly warned that using LLMs for therapy or critical decision-making is “deeply irresponsible” because these systems are unproven and unreliable. Even with “reasoning” upgrades, GPT-5 and its peers still produce confident falsehoods. The models’ capacity to simulate knowledge masks their inability to reason causally or verify claims. As Marcus observed, the industry’s epistemic problem is not computational but cognitive.

The scaling hypothesis, expressed by the belief that simply making models larger yields emergent intelligence, is faltering. Altman conceded in an interview with TechCrunch that “we’re at the end of the era where it’s gonna be these giant models […] we’ll make them better in other ways.” It was an extraordinary acknowledgment from the industry’s leading figure that size alone no longer guarantees progress. Gary Marcus described the same turning point: GPT-5’s release disappointed, and even long-time optimists such as Rich Sutton began tempering their expectations. High-quality human text may be fully mined by the end of the decade, while compute costs rise exponentially. In an October 2025 interview, Bezos warned that the AI sector risked becoming “an industrial bubble,” with capital inflows outpacing real technical progress.

The evidence is bipartisan: critics and creators alike see the ceiling. LLMs can predict but not understand, scale but not transcend, impress but not reason. The dream of AGI through language models is not a milestone ahead but a mirage. Altman’s and Bezos’s remarks hint that even those steering the field are preparing for a post-LLM future, one that demands architectures capable of genuine reasoning, not just next-word prediction. Steering away from the AGI narrative seems like a sensible thing to do.

Part 6: The Ecological Limitations and Impact of AI

The artificial intelligence revolution runs on an infrastructure that is increasingly straining Earth’s finite resources.

Water

Data centres currently consume approximately 560 billion litres of water annually globally, projected to rise to 1,200 billion litres by 2030. Already in 2023, data centres in the United States consumed approximately 17.5 billion gallons of water directly through cooling systems, whilst Google’s data centres alone consumed nearly 6 billion gallons of water in 2024, marking an 8 per cent annual increase. In the United States, hyperscale data centres alone will withdraw 150.4 billion gallons of water between 2025 and 2030, equivalent to the annual water withdrawals of 4.6 million households.

This voracious thirst extends beyond cooling towers: a single 100-megawatt data centre consumes about 2 million litres of water daily, equivalent to the consumption of 6,500 households. Texas exemplifies this crisis: data centres will consume 49 billion gallons in 2025, soaring to 399 billion gallons by 2030, representing 6.6 per cent of the state’s total water usage. Morgan Stanley calculates that by 2030, global data centres will require 450 million gallons daily, equivalent to 681 Olympic-sized swimming pools of freshwater. In Georgia, residents reported problems accessing drinking water from their wells after a data centre was built nearby.
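The unit conversions above are easy to sanity-check. The sketch below assumes US gallons and a nominal Olympic pool of 50 m x 25 m x 2 m (2.5 million litres); the pool depth is my assumption, as the source does not state which pool volume was used.

```python
LITRES_PER_US_GALLON = 3.785411784          # exact definition of the US gallon
OLYMPIC_POOL_LITRES = 50 * 25 * 2 * 1_000   # assumed 50 m x 25 m x 2 m pool


def gallons_to_pools(gallons: float) -> float:
    """Convert US gallons to nominal Olympic swimming pools."""
    return gallons * LITRES_PER_US_GALLON / OLYMPIC_POOL_LITRES


pools_per_day = gallons_to_pools(450e6)        # 450 million gallons daily
litres_per_household = 2_000_000 / 6_500       # 100 MW site vs. 6,500 households
texas_growth = 399 / 49                        # Texas, 2025 to 2030

print(f"{pools_per_day:.0f} pools per day")
print(f"{litres_per_household:.0f} litres per household per day")
print(f"{texas_growth:.1f}x growth in Texas data centre water use")
```

On these assumptions the 681-pool figure reproduces, the household comparison implies a little over 300 litres per household per day, and the Texas projection amounts to roughly an eightfold increase in five years.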

Water-stressed regions face particular challenges. Microsoft acknowledges that 42 per cent of the water it consumed in 2023 came from areas with water stress, whilst demand spikes during hot periods when communities most need water themselves.

Still, the concentration extends beyond manufacturing to resources these facilities consume. TSMC’s Taiwan operations use approximately 6% of the island’s electricity. Water usage proves equally staggering: advanced fabs consume 15 million gallons daily. Taiwan’s 2021 drought forced semiconductor plants to truck water, briefly threatening global supplies.

Lithium presents different challenges. While geographically dispersed, battery-grade lithium for datacenter backup systems faces structural deficits. Current production of 100,000 metric tons annually must triple by 2030, yet new mines average 7-10 years from discovery to production. The “lithium triangle” of Argentina, Bolivia, and Chile controls 60% of reserves but faces water scarcity directly conflicting with extraction needs.

Energy

The strain on electrical grids proves equally alarming. Data centres consumed about 17 gigawatts of power in 2022, and global data centre electricity consumption is projected to grow from 415 terawatt-hours in 2024 to 945 TWh by 2030 (roughly equivalent to Japan’s entire annual electricity consumption). In the United States alone, data centres will account for nearly half of electricity demand growth, ultimately consuming more electricity for data processing than for manufacturing all energy-intensive goods combined, including aluminium, steel, cement, and chemicals. This surge forces utilities into uncomfortable positions: wholesale electricity costs have increased by as much as 267 per cent in areas near data centres over the past five years, with residential customers bearing these costs through higher bills. The grid infrastructure struggles to accommodate this explosive growth. Nearly 2 terawatts of clean energy (or 1.6 times current US grid capacity) remain delayed in interconnection queues, forcing some operators to rely on fossil fuel backup systems. Virginia’s Loudoun County exemplifies this tension: residents endure constant noise from natural gas turbines powering off-grid data centres, with readings reaching 90dB, well above comfortable levels.
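To put the consumption forecast in perspective, the sketch below derives the implied annual growth rate and the average continuous power draw from the 415 TWh (2024) and 945 TWh (2030) figures quoted above. The helper names are my own, and the conversion assumes load spread evenly over an 8,760-hour year, which real facilities will not match exactly.

```python
HOURS_PER_YEAR = 8_760  # non-leap year


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


def average_gigawatts(twh_per_year: float) -> float:
    """Average continuous power (GW) implied by annual energy use (TWh)."""
    return twh_per_year * 1_000 / HOURS_PER_YEAR  # TWh -> GWh, then divide by hours


growth = cagr(415, 945, 2030 - 2024)
avg_gw_2030 = average_gigawatts(945)

print(f"Implied growth: {growth:.1%} per year")
print(f"Average draw in 2030: {avg_gw_2030:.0f} GW")
```

On these inputs the forecast implies growth of roughly 15% per year, and 945 TWh corresponds to a little over 100 GW of continuous draw.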

Pollution

The environmental burden extends upstream to the rare earth elements essential for data centre hardware. Rare earth extraction requires cocktails of chemical compounds including ammonium sulphate and ammonium chloride, which create air pollution, cause erosion, and leach into groundwater. In China’s Baotou region, the world’s rare earth capital, mining operations have been linked to severe environmental degradation and health problems for local communities. The process leaves behind radioactive thorium and uranium alongside other heavy metals, creating lasting environmental legacies. The sustainability paradox remains stark: whilst rare earth elements enable energy-efficient technologies, China controls 60 per cent of global production and 85 per cent of refining capacity, creating supply chain vulnerabilities and environmental injustices. Extraction requires tremendous amounts of energy and water whilst generating large quantities of waste often mixed with radioactive elements.

The growing frequency of natural disasters (e.g., the Texas freeze or Malaysia’s flooding) creates additional vulnerabilities in an already concentrated supply chain, compounding risks from geopolitical tensions and making it increasingly difficult to distinguish between natural disruptions and potential hostile actions.

As AI capabilities expand exponentially, the ecological debt accumulates silently. Without fundamental shifts in cooling technologies, renewable energy deployment, and rare earth recycling (currently below 10 per cent in the United States), the digital revolution risks becoming an environmental catastrophe.

A Parting Thought

Today’s financial markets are sustaining extraordinary AI valuations through increasingly creative structures, from the Magnificent Seven’s index concentration to private market marks that defy traditional metrics. This determination to extend the rally despite mounting evidence of constraints signals a dangerous divergence between narrative and reality.

Based on factual analysis, I have become convinced we’re approaching hard limits across three critical dimensions. Physics (first): sub-3nm chip manufacturing faces steep barriers that at some point no amount of capital can overcome. Infrastructure (second): datacenters already strain power grids and water supplies. Algorithms (third): current architectures show diminishing returns to scale, with each GPT iteration requiring exponentially more compute for marginally better performance. These aren’t temporary bottlenecks but rather fundamental constraints that invalidate the exponential growth assumptions underlying current valuations.

My assessment remains simple. I believe we’re approaching a significant repricing in AI equities, and some money is already quietly repositioning. I anticipate the rally will continue for a while yet, but its foundation weakens with each passing quarter. As for the dominant players in this space, especially OpenAI’s Sam Altman and Nvidia’s Jensen Huang, I believe they will increasingly steer the narrative away from AGI’s excessive expectations, as I am sure both gentlemen are fully aware of the power they hold in shaping the markets. One wrong statement and they could tank everyone. I expect we will be reading a whole lot of mighty careful phrasing over the next few quarters in replies to critical questions, alongside hyperbole about new, unproven, and emerging markets (e.g., robotics).

The dotcom parallel remains instructive. Cisco, trading at 200 times earnings in March 2000, collapsed 89% by 2002, yet emerged as the Internet’s backbone infrastructure provider, eventually justifying those early believers who understood the technology whilst recognising the valuation excess. Today’s AI revolution follows similar contours: transformative technology, valid long-term thesis, but valuations that have decoupled from any reasonable discount model. TCP/IP did change the world, just not quickly enough to justify 1999’s prices.

By the same token, AI will transform civilisation in the long run, but not rapidly enough to support its near-term multiples.