On Apple's Illusion of Thinking

How Apple's latest critiques of LLMs may subtly foreshadow Apple's WWDC 2025 statements on AI

Those working in the AI community have long understood that LLMs can be thought of as autocorrect on steroids. Technically, we should call it next-token prediction, although even that is not entirely accurate: it is not just surface-level statistical correlation, and there is evidence of deeper, more structured knowledge representation happening internally.

But are LLMs nothing but stochastic parrots, and are we anthropomorphizing AI when we attribute human characteristics such as reasoning or thinking to it? By now, the limitations of statistical models such as LLMs are well understood, including how, without explicit causal modeling, they are severely constrained in capturing causal relationships.

If anything, I believe Apple’s latest paper offers few new insights for those who work in AI, but it might be a wake-up call for those with only surface-level knowledge of the industry, illustrating how brittle and fundamentally flawed the apparent reasoning abilities of LLMs are.

But what timing. Might there be a reason Apple released this research paper two weeks ahead of WWDC 2025?

An Overview of Apple’s Recent Critiques of AI Reasoning

When Mehrdad Farajtabar, one of the paper’s authors, announced the team’s first findings on X back in 2024, he wrote:

… we found no evidence of formal reasoning in language models …. Their behavior is better explained by sophisticated pattern matching—so fragile, in fact, that changing names can alter results by ~10%!

In this earlier 2024 paper, the Apple team investigated the limitations of LLMs’ mathematical reasoning and developed a task called GSM-NoOp, in which statements that seem relevant, but are in fact irrelevant to the reasoning and the conclusion, are added to the questions. Most models failed to ignore these statements and blindly converted them into operations.
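The GSM-NoOp idea can be illustrated with a small, hypothetical sketch: take a word problem, add a clause that sounds relevant but changes nothing, and compare a solver that uses only the quantities that matter with a naive “pattern matcher” that turns every number it sees into an operation. The problem text, the numbers, and both functions are invented for illustration. This is not Apple’s benchmark code, and real models fail in less mechanical ways.

```python
import re

# Hypothetical word problems in the spirit of GSM-NoOp (numbers invented for illustration).
BASE = ("Ana buys 12 apples on Monday and 15 apples on Tuesday. "
        "How many apples does she have?")
NO_OP = ("Ana buys 12 apples on Monday and 15 apples on Tuesday, "
         "but 4 of them are slightly smaller than the rest. "
         "How many apples does she have?")


def correct_solver(monday: int, tuesday: int) -> int:
    """Smaller apples are still apples, so the extra clause is irrelevant."""
    return monday + tuesday


def naive_pattern_matcher(problem: str) -> int:
    """Caricature of brittle pattern matching: add the first two numbers,
    then treat any number that follows 'but' as something to subtract."""
    numbers = [int(n) for n in re.findall(r"\d+", problem)]
    total = numbers[0] + numbers[1]
    for extra in re.findall(r"but (\d+)", problem):
        total -= int(extra)  # the irrelevant clause is blindly turned into an operation
    return total


print(correct_solver(12, 15))       # → 27, with or without the no-op clause
print(naive_pattern_matcher(BASE))  # → 27
print(naive_pattern_matcher(NO_OP)) # → 23, thrown off by an irrelevant detail
```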

This brittle performance reveals that LLMs are not truly reasoning; they are merely regurgitating learned patterns and can be thrown off by changes that a genuine reasoning process would easily accommodate. LLMs lack the robust, systematic reasoning capabilities that human intelligence displays, and their successes are largely confined to the boundaries of their training data.

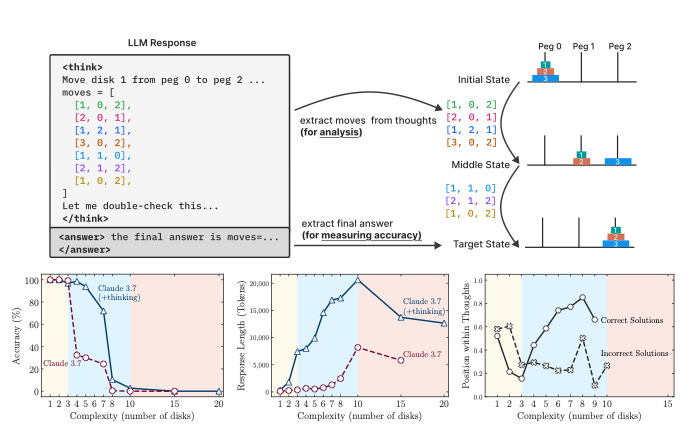

The 2025 paper demonstrates that LLMs and Large Reasoning Models (LRMs) fail to consistently apply deterministic algorithms to solve puzzles like the Tower of Hanoi, instead relying on non-generalizable reasoning that collapses beyond a model-specific complexity threshold. Although these puzzles have well-defined recursive solutions (for the Tower of Hanoi, the number of moves satisfies T(n) = 2T(n-1) + 1 with T(1) = 1, giving an exponential 2^n - 1 moves for n disks), LRMs do not leverage this structure. They appear to depend on learned patterns that break down as the problem size, such as the number of disks, increases. Remarkably, even providing the explicit algorithm does not prevent this collapse, underscoring limitations in executing logical steps.
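The recursive structure mentioned above fits in a few lines. The sketch below is a standard textbook formulation, not code from the paper; the peg names and function names are arbitrary choices. It generates the optimal move sequence and checks that its length matches both the recurrence T(n) = 2T(n-1) + 1 and the closed form 2^n - 1.

```python
def hanoi(n: int, source: str = "A", target: str = "C", spare: str = "B") -> list[tuple[str, str]]:
    """Return the optimal list of (from_peg, to_peg) moves for n disks."""
    if n == 0:
        return []
    # Move n-1 disks out of the way, move the largest disk, then restack the n-1 disks on top.
    return (hanoi(n - 1, source, spare, target)
            + [(source, target)]
            + hanoi(n - 1, spare, target, source))


def moves_by_recurrence(n: int) -> int:
    """T(0) = 0 and T(n) = 2T(n-1) + 1, so T(1) = 1."""
    return 0 if n == 0 else 2 * moves_by_recurrence(n - 1) + 1


# The generated solution, the recurrence and the closed form all agree.
for n in range(1, 11):
    assert len(hanoi(n)) == moves_by_recurrence(n) == 2**n - 1

print(hanoi(2))        # → [('A', 'B'), ('A', 'C'), ('B', 'C')]
print(len(hanoi(10)))  # → 1023
```

The algorithm itself is trivial; what grows is the length of the move sequence, which roughly doubles with every extra disk and has to be executed without a single mistake.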

These findings highlight the constraints of token-based reasoning, but they build on already known limitations of LLMs’ reasoning capabilities. The conclusion is not new to the AI community; what is novel is the use of controlled puzzle environments and detailed analysis of the reasoning traces.
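A controlled environment is what makes detailed trace analysis possible: because the rules are explicit, every move in a model’s proposed solution can be replayed and checked. A minimal simulator in that spirit might look like the sketch below. The `check_trace` name, the `(from_peg, to_peg)` move format and the status strings are assumptions for illustration, not the paper’s actual harness.

```python
def check_trace(n: int, moves: list[tuple[str, str]]) -> str:
    """Replay a proposed Tower of Hanoi trace (all n disks start on peg 'A', goal is peg 'C').

    Returns "solved", "incomplete", or a description of the first illegal move.
    """
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}  # each list runs bottom -> top
    for step, (src, dst) in enumerate(moves, start=1):
        if src not in pegs or dst not in pegs:
            return f"illegal move at step {step}: unknown peg"
        if not pegs[src]:
            return f"illegal move at step {step}: peg {src} is empty"
        if pegs[dst] and pegs[dst][-1] < pegs[src][-1]:
            return f"illegal move at step {step}: larger disk onto smaller"
        pegs[dst].append(pegs[src].pop())
    # Legal moves keep every peg ordered, so n disks on 'C' means the puzzle is solved.
    return "solved" if len(pegs["C"]) == n else "incomplete"


optimal_two_disks = [("A", "B"), ("A", "C"), ("B", "C")]
print(check_trace(2, optimal_two_disks))           # → solved
print(check_trace(3, optimal_two_disks))           # → incomplete
print(check_trace(2, [("A", "C"), ("A", "C")]))    # → illegal move at step 2: larger disk onto smaller
```

Reporting the first failing step, rather than only a final right or wrong verdict, is what allows researchers to see where in a long trace a model breaks down.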

So I am not betting on a market crash following the release of Apple’s paper, and calling it a knockout blow, as Gary Marcus does, might be taking it too far, although I understand Gary’s annoyance with the overinflated expectations of the uneducated masses. It is well worth reading Gary’s earlier statements, as well as Subbarao Kambhampati’s work.

Anthropomorphization, Cognitive Biases, and Synthetic Reasoning

Humans often attribute human-like traits, intentions, or understanding to AI systems, a phenomenon known as anthropomorphization. This tendency stems from cognitive biases, such as the inclination to project familiar mental models onto unfamiliar systems. For instance, when large language models (LLMs) produce coherent text, users may assume they possess human-like reasoning or consciousness, mistaking linguistic fluency for genuine comprehension. This bias, rooted in our pattern-seeking nature, obscures the reality: LLMs operate via statistical pattern recognition, not cognitive processes akin to human thought. And although LLMs produce grammatically accurate prose, its content may be severely lacking.

Cognitive biases like the illusion of explanatory depth - where people overestimate their understanding of complex systems - further amplify anthropomorphization. When LLMs generate responses that mimic logical or causal reasoning, users may infer a deeper understanding that isn’t there. Research shows that LLMs lack explicit world models or causal reasoning capabilities, relying instead on correlations in training data. Their outputs, while sophisticated, are probabilistic approximations, not reflections of a structured understanding of reality.

Anthropomorphization also has practical implications. Overtrust in AI systems, driven by cognitive biases, can lead to misuse or overreliance, as seen in cases where users accept LLM outputs without scrutiny. Conversely, understanding AI’s limitations fosters realistic expectations and drives innovation toward true synthetic reasoning. Embodied AI, such as robotics, may bridge this gap by integrating perception, action, and reasoning, creating systems that interact with the physical world in ways that align more closely with human cognition.

Towards a Better Understanding of Human Language Generation and Reasoning

When using terms in AI like reasoning, thinking, or intelligence, caution is essential. Humans and large language models (LLMs) generate language through fundamentally different processes.

Human speech production is not a simple word-by-word prediction; it involves distinct stages. In cognitive models (such as Levelt’s model), speaking is divided into three broad stages: conceptualization, formulation, and articulation. In conceptualization, humans generate a pre-verbal idea or message, determining what they intend to express. Next, during formulation, this concept is translated into linguistic form by selecting appropriate words and grammar. Finally, articulation activates motor systems to produce speech sounds physically.

In simpler terms, humans “predict ideas” first and then encode them in words, rather than predicting one word at a time without a plan; in humans, thought precedes language. Functional MRI (fMRI) and other neuroimaging studies confirm this staged process. Initial brain activation occurs in semantic and conceptual regions (e.g., left middle temporal gyrus and medial frontal/parietal cortex), reflecting idea formation before linguistic formulation begins. Subsequently, language-specific areas, notably Broca’s and Wernicke’s areas, activate to handle words, syntax, and phonology. Finally, motor regions, including the primary motor cortex and supplementary motor areas, engage to execute speech articulation.

Thus, human language generation involves clearly distinct neural stages: conceptual thought formation, linguistic encoding, and physical articulation. The brain first finds the meaning and the appropriate words, then assembles the sounds. These are distinct steps with distinct neural signatures, very unlike how LLMs work. Even though researchers are investigating how ontologies can boost RAG/LLM systems, we might still be a long way from AI that is able to understand meaning.

Asking a Controversial Question

But LLMs are not the only systems that struggle with logical reasoning; humans know a thing or two about that as well. This raises a controversial question: what if the limitations we observe in LLMs actually mirror the way human beings think and reason?

Inconsistent performance is a striking shared trait. Just as humans can falter under fatigue or ambiguous phrasing, LLMs show significant accuracy drops after minute changes, such as swapping a number or adding irrelevant information to a puzzle. Apple observed that these models sometimes reduce their “thinking effort” as problems grow harder, something we could figuratively call a “quitter’s mentality”, or loss aversion.

Far from being a flaw exclusive to artificial systems, this mirrors the instability of human judgment, suggesting LLMs don’t deviate from, but rather mimic, aspects of human reasoning behaviour. And what is the difference between the confident hallucinations of LLMs and the Dunning-Kruger effect that hits us all at some point?

Apple’s study highlights how LLMs, like humans, can be led astray by irrelevant details, something most of us have experienced in daily life. Apple found that introducing trivial distractions could cause performance drops of up to 65%, even when the models knew the correct solution. This parallels well-known cognitive biases such as anchoring in human decision-making. The convergence of all these behaviours (decision fatigue, framing effects, effort variability, sensitivity to noise, and many more) raises a profound question: are we witnessing the limitations of artificial intelligence, or a digital mirror reflecting the inherent quirks of human reasoning?

Research suggests that decision-making and judgement are often intuitive, rapid responses, for which humans construct logical-sounding justifications after the fact. In that sense, we humans are mere post-hoc narrative generation machines. It makes one wonder whether setting human reasoning as the bar for AI might simply be too low.

It is interesting to see new research exploring frameworks like the Computational Thinking Model (CTM), which integrates live code execution to enforce iterative, verifiable reasoning, potentially mitigating LLMs’ tendency to “quit” on hard problems. Similarly, fostering a growth mindset in humans encourages persistence through challenges, suggesting that AI could benefit from analogous mechanisms. From a human perspective, the CTM approach could be seen as a form of neuroplasticity.
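The general idea of letting executed code, rather than the model’s own confidence, decide whether an answer stands can be sketched as a propose, execute, verify loop. This is a hypothetical illustration, not the CTM’s actual implementation: `propose_candidates` is an invented stand-in for a model that suggests answers, and the verifier is ordinary Python checking a toy task (finding an integer square root). Rather than stopping at the first plausible-looking answer, the loop keeps going until the executed check passes or an explicit budget runs out.

```python
from typing import Callable, Iterable, Optional


def propose_candidates(question: int) -> Iterable[int]:
    """Hypothetical stand-in for a model: yields guesses, starting with wrong ones."""
    yield question // 2        # a plausible-looking but wrong first guess
    yield question // 10       # another wrong guess
    for guess in range(question + 1):
        yield guess            # fall back to systematic search


def verify(question: int, answer: int) -> bool:
    """Executed check: an answer is accepted only if running the code confirms it."""
    return answer * answer == question


def solve_with_verification(question: int,
                            propose: Callable[[int], Iterable[int]],
                            check: Callable[[int, int], bool],
                            budget: int = 1000) -> Optional[tuple[int, int]]:
    """Return (answer, attempts) for the first verified candidate, or None if the
    budget is exhausted or the candidates run out."""
    for attempt, candidate in enumerate(propose(question), start=1):
        if attempt > budget:
            return None
        if check(question, candidate):
            return candidate, attempt
    return None


print(solve_with_verification(1369, propose_candidates, verify))             # → (37, 40)
print(solve_with_verification(1369, propose_candidates, verify, budget=10))  # → None
```

The design choice worth noting is that persistence is bounded: the budget makes “giving up” an explicit, observable outcome instead of a silent drop in effort.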

As you can already sense, asking the question the other way around opens up some very interesting new perspectives, albeit ones without definite answers.

Towards a Next Generation of Synthetic Understanding

Yet skeptics and optimists alike agree that next-word prediction alone cannot produce genuine understanding or causal reasoning about the world. We should not mistake our wants for reality. Human cognition relies on mental models of reality, shaped by sensory experience and logical reasoning, which guide language production. In contrast, large language models (LLMs) generate text based on statistical patterns, producing outputs that may appear logical but lack an explicit model of the world.

Equating LLMs’ capabilities to human-like knowledge oversimplifies their function. While their internal structures may form patterns we interpret as “concepts,” these are not grounded in logic or real-world understanding but emerge from mimicking human language. To advance AI, many researchers propose integrating LLMs with systems that emulate human cognition, such as modules for explicit reasoning, memory, or sensory perception.

For instance, Yann LeCun advocates for architectures like Joint-Embedding Predictive Architectures (JEPA), where AI learns world models through environmental interaction, enabling human-like planning and comprehension.

This need for embodied, interactive systems underscores why robotics and embodied AI may drive the next wave of AI innovation. By combining perception, reasoning, and physical interaction, robotics could unlock capabilities far beyond today’s text-based models, paving the way for more intelligent and adaptable machines.

One Last, Very Speculative, Thing

So, with WWDC’25 coming up later today, it’s time to speculate.

So why would a company that tried to rebrand AI as “Apple Intelligence” in 2024 be warning against overhyped expectations of AI? Skeptics claim Apple has missed the boat; believers say it’s just patient innovation.

Yet through the fog of war, some things become clear:

This latest research paper’s intended audience is clearly the media, business people, and financial markets. For many AI experts and researchers, the paper offers few if any new insights, apart from a methodology that confirms what we already knew.

The general perception in the markets is that Apple is lagging in AI, and in innovation in general. After 2024’s bold announcements on AI adoption within the Apple ecosystem, and the lacklustre rollout in 2025, many have concerns about Apple’s AI strategy.

The stock price of Apple (AAPL) has fallen since this year’s high (January 2025) and has been trading sideways ever since. Still, Apple has US$28 billion in cash and cash equivalents (down US$1.7 billion since last year), and with a market cap of US$3 trillion, it still has plenty of cash and stock with which to potentially acquire value.

Former Apple designer Jony Ive has partnered with OpenAI to work on OpenAI’s iPhone-killer device, while Laurene Powell Jobs, the widow of the late Steve Jobs, has invested in Ive’s company and says she is expecting big things. Coming from someone who witnessed up close how big things came to be, including the iPhone, that means something.

Without dismissing the validity of Apple’s latest paper on AI, one might question how Apple plans to integrate AI within its ecosystem.

One pragmatic speculation is that Apple will leverage its privacy focus and hardware ecosystem, making on-device AI the cornerstone of its strategy. Apple is still one of the few companies that control both the software and the hardware side of things.

The paper might very well have been a setup to buy patience for Apple's integration of AI features. So one speculation I wish to make is that we might hear Tim Cook say something along the lines of “the current state of AI technology is not yet living up to Apple’s vision for AI”, and “until it does, Apple will continue to diligently roll out AI only when it lives up to the privacy and quality that Apple products are known for”.

I guess we’ll need some more patience…

** Update 10/06: WWDC25 is over and, as speculated above, Apple indeed announced (a) that developers will be able to embed on-device AI models (without further specifics), and (b), when Apple software chief Craig Federighi discussed Siri’s AI overhaul, that “[This work] needed more time to reach our high quality bar and we look forward to sharing more about it in the coming year”. This sounds like an eerie echo of Tim Cook’s statement during Apple’s last quarterly earnings call, where he said they “… need more time to complete our work on these features so they meet our high quality bar”.